The Mariani-Silver Algorithm for Drawing the Mandelbrot Set

This is going to be another one of those “I better write this down before I forget” stories. Plus I get to talk about an algorithm that was named after me so what’s not fun about that?

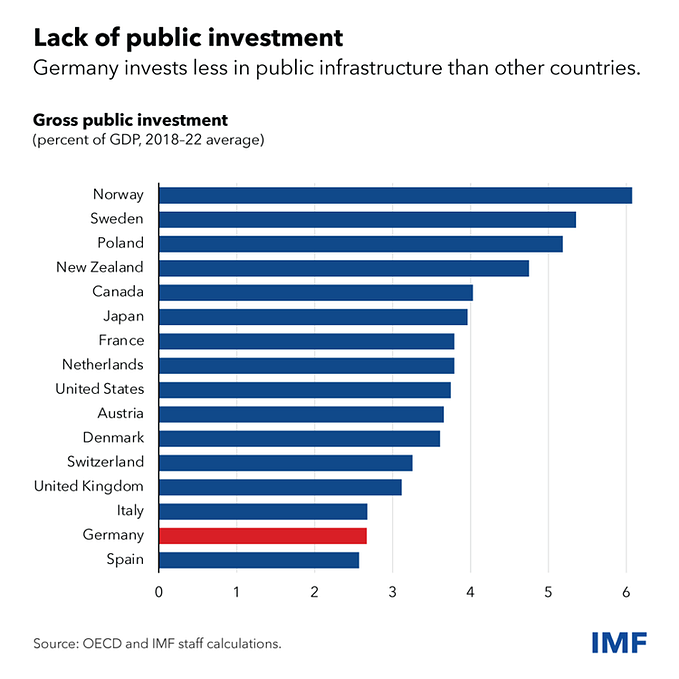

First a motivational picture :D

OK so what is the Mariani-Silver algorithm, why is it called that, and why does it mostly work? That’s what we’ll talk about today (Sabine Hossenfelder would be proud of this intro).

What is the Mariani-Silver Algorithm?

Briefly, it is a way of plotting the Mandelbrot set more quickly. It sometimes makes mistakes in that it might produce a result that is different than the brute force method but typically these mistakes are very small and even when it’s “wrong” it still makes pictures that are arguably just as cool with significant speed-up.

The algorithm is this: if you ever find a bounded region of pixels that are all going to be “the same color” (we’ll get into what that means later) then you can safely fill in the entire region without computing the interior. In the first version that I made that used this property, the algorithm started by drawing a rectangle around the whole screen and then making a “plus” shape to divide the screen into quadrants. It tested each quadrant’s border for constant color (which invariably fails at first), and then recursed on each quadrant until every candidate rectangle was either filled because it had constant boundary, or a minimum rectangle size was reached and the algorithm switched to brute-force.

When I did this work I was at The Ontario Science Centre (OSC) working in their computer lab. My manager Katherine (“kat”) knew Kee Dewdney who was then curating “Mathematical Recreations” or “Computer Recreations” (I don’t remember when it changed names) for Scientific American. Kat encouraged me to contact Kee, which I did. Kee put me in contact with Rollo Silver who was creating high quality images of the Mandelbrot set on slides. I don’t know for sure but I imagine Kee was getting images from Rollo for his talks or for publication or whatever. Or maybe they were just friends. In any case, Kee forwarded the algorithm (with permission) to Rollo who then contacted me directly.

Rollo’s first implementation of the algorithm was for a Mac, and it was similar to mine — it also used rectangles, but his version divided the rectangle first horizontally, then vertically, and so forth, creating two halves in the recursion instead of four quadrants. It’s not clear that either choice was especially better but any region works so, “pick something easy and go for it” was the plan.

Both versions offered potentially tremendous speedup. Sometimes “six times faster” is cited but that’s bogus: the speedup can be as big as you want it to be — it entirely depends on what region and iteration count you pick. You can choose a region with no speedup or you can choose a region with 100x speedup. But it’s fair to say that typical “pretty” regions usually get 5x improvement or so. Or they did anyway when I was measuring such things over 35 years ago.

Why is it called the Mariani-Silver Algorithm?

Well, it wasn’t at first. I of course didn’t call it anything. It was Dewdney that gave it a name when he published an article on it in I think 1989 — maybe the February edition — of Scientific American. I have a copy somewhere. He called it “Mariani’s Algorithm.” [edit: a friend confirmed it was the Feb 1989 edition — thanks Tom.]

I had largely nothing to do with the algorithm after that, by then I was working at Microsoft, but at some point it started being called the Mariani-Silver algorithm. Maybe because of changes Rollo had been making and publishing, or maybe because of advocacy, his name was starting to be associated with it as well. I can’t be sure how it happened.

I noticed the name change many years after the original article and I was rather pleased about it because while the particulars of the recursion, the traced shapes, and other parameters, have been changed over the years, the basic algorithm is what it always was — those possibilities had always been there. The reason I thought it was great that Rollo’s name was on it was that, back at the start of all this, Rollo finished the proof of correctness!

Why does it (mostly) work?

OK, at this point I’m going to introduce some notation:

M : the Mandelbrot set: i.e. the set of complex numbers where iterating z = z²+c remains bounded no matter the number of iterations

M(n) : the set of complex numbers where z = z²+c is bounded after n iterations, specifically the result of n iterations has modulus less than 2.

p(n) : the complex polynomial (in the variable c) created by n iterations of z = z²+c starting from z=0; i.e.

- p(0) =c

- p(1) = c²+c

- p(2) = (c²+c)²+c

- p(3) = ((c²+c)²+c)²+c

- etc.

m(n) : means M(n)-M(n+1) that is that part of M(n) that is not also in M(n+1), more formally it’s M(n) intersected with the inverse of M(n+1).

Note that M(n+1) is always a subset of M(n) (n ≥ 0) by construction.

So, the important observation is this: You can’t draw M. Nobody even tries to draw M. They always draw M(n) for some n, often a large n. And indeed you usually don’t even try to draw all of M(n) you draw some piece of it because zooming in makes good pictures.

Now M is a nasty fractal beast with bizarre properties. Wow, good thing we’re not trying to draw that monster. M(n) on the other hand is completely defined by p(n) and while p(n) can be big, it’s a polynomial. And polynomials are wonderful well behaved things with lots of lovely useful properties.

Now I’m going to offer the following “proof” of correctness of an algorithm that I know not to be correct. So bear with me, the actual algorithm only approximates the truth described below. And as proofs go this will be a sketch rather than a proof because this article is already too long.

First, when drawing an approximation to M, in addition to plotting M(n) for some n, usually in black, we also draw m(i) for all 0 < i< n in differing pretty colors.

So let’s consider just the business of drawing M(n) where n is our desired iteration count.

Suppose we find a region R with boundary r and all points in r are in M(n). This means by definition that: |p(n)(z)| < 2 for all z in r.

In terms of the algorithm, it means that we found a bounded area (like a rectangle) and all of the points on the boundary have an iteration count of n, the limit for this rendering.

The Maximum Modulus Theorem (MMT) says:

Let G be a bounded region and let f be holomorphic in G and continuous on G and its boundary. Then |f| attains its maximum on the boundary of G.

I knew that much complex analysis and I knew that it meant if you found a boundary r where |p(n)(z)| < 2 for all z in r then |p(n)(z)| < 2 for all z in R.

Why?

p(n) is just a polynomial. Polynomials are wonderful beautiful things. They are continuous everywhere, holomorphic everywhere, it doesn’t get any nicer than polynomials. So if p(n) is bounded on r then it meets the criteria for the MMT and its maximum modulus occurs on r. Since all of the values on r have modulus < 2 then all the values on R, the “inside”, also have modulus < 2.

For the algorithm, that means we could fill the inside of R with “black” having only computed values for the border r; any border of any closed region.

But here’s the rip — the math requires p(n) to be bounded on all of r but the algorithm only samples finitely many points! So this can go wrong. And it does. But let’s put that aside for now.

When I wrote to Rollo the above was as much of the proof as I had. I conjectured that you could use the same trick to plot m(i) for 0 < i < n; but I didn’t have a proof of that (let’s call that proof Part 2). I was trying to use MMT with 1/p(i) but that wasn’t working. Turns out I was barking up the wrong tree.

At this point I have to make some disclaimers. What I remember for sure is this:

- I didn’t have a proof for Part 2 back then

- Rollo provided a proof a few weeks after I wrote him

- I understood his proof well enough, it was obviously correct, I suspect he shared it with Dewdney and others, I recall it being pretty simple

- I don’t remember Rollo’s proof, so I’m going to offer you my own proof of Part 2, however

- it’s entirely possible, even likely, that although I don’t remember Rollo’s proof that it’s still rattling around my head somewhere and what I’m about to do is repeat Rollo’s proof

- on the other hand, I might botch this next bit in which case any mistakes are mine

- only Rollo could tell me if this was his proof or not

OK the proof of Part 2, that shows you can use this technique to help you draw m(i) for i < n

Suppose we have r, the boundary of a closed region R and all the points of r are in m(i) for some i<n and thus not in m(j) for any j > i by construction.

This corresponds to the situation where a typical Mandelbrot algorithm has found a boundary of solid color of one of the colors for an earlier iteration, not “black”.

So let’s consider the possible dispositions of this region R.

It is trivially true that one of these three options is the situation:

- R does not overlap M(i+1) at all

- R partly intersects with M(i+1)

- R completely contains M(i+1)

Case 1: If R does not overlap with M(i+1) then we can argue exactly as before, because |p(i)(z)| < 2 for all z in R.

Case 2: If R does partly overlap with M(i+1) then it cannot be the case that R is strictly inside of m(i) by construction, so this case cannot happen.

Case 3: Rollo ruled out this case by requiring that we never apply the optimization to any region R that completely contains M. We note that M is a subset of M(i+1) so if R completely contains M(i+1) it completely contains M. We are barred from applying the optimization if M is a subset of R.

It’s not at all onerous to arrange for Case 3 to never happen, you can simply ensure that you never consider any region R that is big enough (raw dimensions) to hold all of M. This is usually trivial because the algorithm typically begins zoomed in such that the entire region under consideration is much smaller than M. But if there is any chance that the region being drawn is bigger than M you could simply divide the region into e.g. 4 rectangles with (0,0) on the common corner of the 4 rectangles. Or you could tile the original region into smaller regions such that none of the smaller region can possibly hold M because all have dimensions that are too small.

With these two parts in place, the remaining problem is that we only sampled finitely many points when concluding that we had found a candidate boundary r, which should not suffice. Yet the algorithm still works pretty well! Why?

Well p(i) is very well behaved for all i. In particular p(i) is continuous. This means that if |p(i)(z)| < 2 for some z then there is a neighborhood around z where |p(i)|<2. Now let’s consider that we have those finitely many points sampled around the border of a region, each with bounded modulus. If those points are “pretty close together” and p(i) is “not changing too fast” around the sampled region then those neighborhoods are likely to overlap. And if they do overlap, then we really do have a continuous border around a region and we could legitimately use this technique.

Consider also that if p(i) is changing very quickly the likelihood that you would sample nearby points and find the same iteration count at all of them is likely to be low. For most parts of most plots the outer areas change fairly smoothly. Also, below a certain size region the optimization isn’t even attempted. So the failing case is a large region that maybe abuts some area of the picture that changes rapidly and just-so-happens to miss observing any iteration changes with its finite samples. In practice this doesn’t happen very much at all — pictures tend to zoom in enough so as to look nice at their iteration count rather than look like they have a lot of crazy mandelnoise. When “zoomed in”, each pixel effectively only needs to stand in for a small neighborhood, so the algorithm is less likely to run into its own weaknesses.

Over the years I talked to many people about rendering M, and it’s very clear that many people had figured this trick out independently. Maybe some before me, I dunno. The fact that it has my name on it is pretty much a fluke of me being at OSC, and Kat knew Kee, so it got published. And of course Rollo finished the proof and probably did a bunch of work to make people aware of the thing. I remember being pretty astonished seeing the algorithm in print, I wasn’t told in advance or anything. I remember a friend at MS saying to me one morning, “is this you?” referring to the article… and I had no idea what he was talking about until he showed me.

And that’s pretty much it.