Virtual Memory, Segments, Paging and Big Pages

Foreword

I’ve had a lively discussion the last few days on X (formerly known as Twitter). I learned some stuff and I thought it might be useful to try to share it. It all started with an innocuous tweet.

I know enough about virtual memory management to be dangerous, but the details of why one thing or another might or might not be happening in Windows are far too deep in the bowels of the system for me to know precisely. But because (a) Casey doesn’t just complain about things because he feels like it, and (b) I was very curious myself, and (c) I thought this was a solved problem, and (d) I knew who to ask, I decided what the heck, I’ll dig in.

Now to be clear, I could have gotten a lot more information from people I know in Windows, but really I didn’t want to. The last thing I want to do is turn this into an article on Microsoft’s Official Position On Two Megabyte Pages. If you want that, read no further; this isn’t it. This is going to be another of my educational pieces on perf stuff and, as usual, it’s only going to be approximately correct. Because, as usual, being very correct would make this article hella longer.

Also, I’m going to interpret a little bit about what I think Casey is getting at based on our thread but I might get that wrong because that’s me interpreting things. So, anything I say about his opinion or other participants, take it all with a grain of salt. @sasuke___420 also significantly participated in the conversation and that’s reflected in the below as well.

Introduction

I thought I would begin with a primer on Virtual Memory to put this into context, because a person can barely even understand the question if they don’t have some details, and there are probably people out there who would like to know more about VM (I’ll use VM to mean virtual memory here, not Virtual Machine).

Casey’s question goes to the heart of VM systems and many of their considerations. That’s why it’s so interesting.

Virtual Memory via Segments

I learned about VM systems almost 40 years ago, and the first type of virtualization I was taught was segmentation. This will be relevant, trust me.

The idea with segmentation is that the memory of a process is divided into some number of contiguous segments. Each segment has a mapping from the address in the process, the virtual address, to the address in physical memory. The segments can be whatever size the program needs them to be.

A virtual address might look like this:

[ 4 bits for the segment number| 28 bits for the segment offset ]

In such a scheme there are up to 2⁴ segments with size up to 2²⁸ bytes. That is, each segment can be at most 256M. I picked these numbers at random. For this article I will use 32-bit virtual addresses and 64-bit physical addresses just so it’s clear which is which.
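To make the bit layout concrete, here is a minimal sketch of splitting a 32-bit virtual address into its two fields. The function name `split_segmented` and the sample address are mine, purely for illustration.

```python
SEGMENT_BITS = 4   # high bits: which of the 16 segments
OFFSET_BITS = 28   # low bits: byte offset within the segment (up to 256M)

def split_segmented(vaddr):
    """Split a 32-bit virtual address into (segment number, segment offset)."""
    if not 0 <= vaddr < (1 << (SEGMENT_BITS + OFFSET_BITS)):
        raise ValueError("virtual address must fit in 32 bits")
    segment = vaddr >> OFFSET_BITS
    offset = vaddr & ((1 << OFFSET_BITS) - 1)
    return segment, offset

segment, offset = split_segmented(0x30001234)
print(segment, hex(offset))  # 3 0x1234
```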

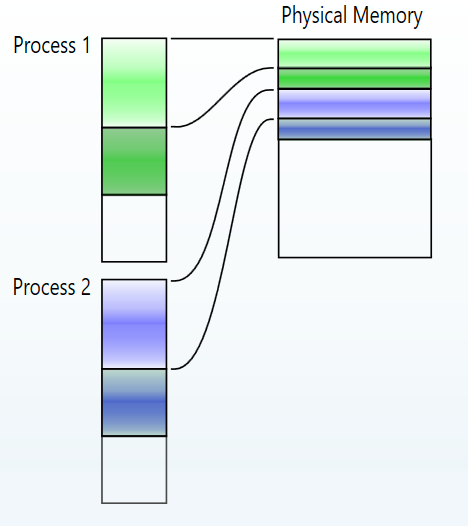

So, we might end up with a picture that looks like this:

As you can see, I squeezed the physical memory to kind of show that there’s a lot of it. The blank areas represent unused memory. The colored areas could slide around anywhere in the physical memory.

Now here’s the thing, to understate the situation, segmented virtual memory is not that popular. Why? Well, lots of reasons.

- The segments could be big and so swapping one out could be mightily expensive. A 256M swap!

- The segments are capped in size so if your program needs an allocation bigger than a segment then life sucks. Famously the 8086 and 80286 processors used 64k segments, which was all kinds of yuck.

- The segments are variable sized, so the physical address space fragments. The OS can only defragment with expensive relocations of potentially huge areas of memory.

Still, some systems did use them, and if the number of segments is small (like in this case, only 16 per process) then keeping track of them is at least pretty easy. Like every other virtual memory system, the processor, or a separate Memory Management Unit (MMU), has to map the virtual address to the physical one. This is what we would call address translation. In this case the MMU would need only 16 registers, each holding the base address of one segment in physical memory. Maybe that base has to be on, say, a 1k boundary (I’m making this up again). In that scheme each of the 16 registers would hold something like this:

[ 54-bit base address high bits | 10-bits assumed to be zero, unstored]

So sixteen 54-bit registers would do the job. When the OS changes processes, it simply reloads the registers. And maybe, if needed, swaps in/out some segments for the new process.
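Here is a rough sketch of what that translation could look like, using the made-up numbers above (16 registers, 1k alignment). The `SegmentMMU` class and its method names are hypothetical, and a real design would also check the offset against a segment length, which I leave out because the text above doesn’t go into it.

```python
OFFSET_BITS = 28   # low bits of the virtual address: offset within the segment
ALIGN_BITS = 10    # segments start on a 1k boundary, so the low 10 bits are implied zeros

class SegmentMMU:
    """Toy model: 16 base registers, each storing the high 54 bits of a physical base."""

    def __init__(self):
        self.base_regs = [None] * 16   # None means "segment not mapped"

    def load(self, segment, phys_base):
        if phys_base % (1 << ALIGN_BITS) != 0:
            raise ValueError("segment base must be 1k aligned")
        self.base_regs[segment] = phys_base >> ALIGN_BITS   # drop the implied zero bits

    def translate(self, vaddr):
        segment = vaddr >> OFFSET_BITS
        offset = vaddr & ((1 << OFFSET_BITS) - 1)
        stored = self.base_regs[segment]
        if stored is None:
            raise LookupError(f"segment {segment} is not mapped")
        return (stored << ALIGN_BITS) + offset

mmu = SegmentMMU()
mmu.load(3, 0x1234567800)
print(hex(mmu.translate(0x30001234)))  # 0x1234568a34
```

Switching processes in this model is just sixteen calls to `load`, which is the appeal of the scheme.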

Grand right? At least this is pretty easy to understand.

Let’s talk about some of the other things that go wrong here:

- If a process wants to grow one of its segments there might not be room in physical memory to accommodate that, so again the OS has to slide memory around.

- If many processes are running the OS might have to stop LOTS of processes to do the necessary slide, ouch!

- Likewise, if a process gives back memory, there’s no easy way to get it back into use because it might give back a strange size. Physical memory will fragment just like with malloc/realloc/free.

- All the other usual malloc problems are present, internal and external fragmentation, etc.
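A toy example of the fragmentation problem from the list above: plenty of memory is free in total, but no single hole is big enough, so the OS would have to slide segments around. The hole sizes are invented for illustration.

```python
def fits_without_relocation(holes, request):
    """True if some single free hole can hold a contiguous segment of `request` size."""
    return any(hole >= request for hole in holes)

# Hypothetical free gaps (in MB) left between segments in physical memory.
holes_mb = [64, 96, 32, 64]
request_mb = 128

print(sum(holes_mb))                                   # 256 MB free in total
print(fits_without_relocation(holes_mb, request_mb))   # False: no single hole is 128 MB
```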

Because of these weaknesses, in modern systems, Segments are only used as logical constructs by loaders and linkers to arrange for related data to be in the same chunk of physical memory and not as an address mapping technique. Sometimes they are equivalently called Sections.

Virtual Memory via Paging

After going over segments, I was introduced to paging which basically exists to get around the problems above. The virtual mapping diagram is substantially the same as before except now the virtual address is divided into equal-sized smaller units. So, of course, there are more of them, and this will be important. A system with paging might look like this:

[ 20-bit page number | 12-bit page offset ]

So here there are up to 2²⁰ pages (about a million), each 2¹² bytes big (4k). That gives 2³² bytes of addressable memory (i.e., 4GB).
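The same kind of bit-slicing sketch works for the paged layout. Again, `split_paged` is just a name I picked for illustration.

```python
PAGE_NUMBER_BITS = 20   # high bits: which page
PAGE_OFFSET_BITS = 12   # low bits: byte offset within the 4k page

def split_paged(vaddr):
    """Split a 32-bit virtual address into (page number, page offset)."""
    if not 0 <= vaddr < (1 << (PAGE_NUMBER_BITS + PAGE_OFFSET_BITS)):
        raise ValueError("virtual address must fit in 32 bits")
    return vaddr >> PAGE_OFFSET_BITS, vaddr & ((1 << PAGE_OFFSET_BITS) - 1)

page, offset = split_paged(0x30001234)
print(hex(page), hex(offset))                            # 0x30001 0x234
print((1 << PAGE_NUMBER_BITS) * (1 << PAGE_OFFSET_BITS) == 4 * 1024**3)  # True: 4GB
```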

Note: actual page table entries for modern processors are much more complicated than this simplification.

This kind of choice is now the standard way things are done. So, why is this better?

- The operating system only offers one size of allocation, the page size (4k here), so physical memory never suffers external fragmentation. The only waste is the unused tail of a partly filled page.

- The OS can page-out and page-in a much smaller unit (4k) to do its virtualization, which is a much more reasonable amount of I/O. Note that in such a system it’s usually called paging rather than swapping, and the backing storage is often called a page file for the same reason.

- The OS never has to relocate physical memory.

- Management of the backing storage is simpler. It also grows in 4k chunks, the OS can grow it as needed in multiples of 4k and any 4k section of the file can be used by any page.

- Other parts of the operating system that are trying to use memory, like the disk cache, can reasonably get and release memory without causing undue problems in regular allocation patterns.

- Note: I didn’t make a diagram for this setup because literally any page could be anywhere in physical memory. So you can draw any arrows you like. I didn’t see the point.

This comes at a price though. Segments were big and so we only had to manage a small number of them. Pages are small by comparison and so there are more. Many more. In this example up to a million pages. These pages can be stored anywhere in physical memory and so the OS might need up to a million entries mapping each page to its physical address.

Each such entry looks something like this:

[ 52-bit base address | 12-bits assumed to be zero, unstored]

And, of course, that’s up to a million entries for each process! There is no way that we can store this much information in the registers of some MMU. The processor has to use some of its main memory for this. This storage is often what we call the Page Table.

Modern Page Tables are complicated beasts because we want them to be dense, but we also want them to allow for lots of gaps in the mapped addresses. I won’t get into all of that here, suffice to say there is a multi-level groups-of-pages style thing going on to get the final base address.
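To give a feel for the multi-level idea without the real-world detail, here is a minimal two-level sketch. The 10/10/12 split is my assumption (it matches classic 32-bit x86 paging, not anything specified above), and nested dictionaries stand in for the in-memory tables; unmapped regions simply have no second-level table, which is how the gaps stay cheap.

```python
PAGE_BITS = 12    # 4k pages
INDEX_BITS = 10   # assumed: 10 bits per level, so 10 + 10 + 12 = 32

class PageFault(Exception):
    pass

class TwoLevelPageTable:
    def __init__(self):
        # top-level index -> {second-level index -> physical frame number}
        self.directory = {}

    def map_page(self, virtual_page, frame):
        top, second = divmod(virtual_page, 1 << INDEX_BITS)
        self.directory.setdefault(top, {})[second] = frame

    def translate(self, vaddr):
        virtual_page = vaddr >> PAGE_BITS
        offset = vaddr & ((1 << PAGE_BITS) - 1)
        top, second = divmod(virtual_page, 1 << INDEX_BITS)
        second_level = self.directory.get(top)          # first memory read in a real walk
        if second_level is None or second not in second_level:
            raise PageFault(hex(vaddr))
        frame = second_level[second]                    # second memory read
        return (frame << PAGE_BITS) | offset

table = TwoLevelPageTable()
table.map_page(0x30001, 0x50000)
print(hex(table.translate(0x30001234)))  # 0x50000234
```

Note the two dependent lookups per translation; that is the cost the rest of this article worries about.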

In a 1TB system (2⁴⁰ bytes), I would need 2²⁸ page table entries (that’s about 268 million of them) to map the whole of memory with 4k pages. If the entries were 8 bytes a pop, that’s 2³¹ bytes or 2GB. And that only maps the pages once!
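The arithmetic is easy to check, and it is worth seeing how it changes with page size. This sketch assumes a flat table with one 8-byte entry per page, as in the paragraph above; `page_table_cost` is a name I made up.

```python
def page_table_cost(memory_bytes, page_bytes, entry_bytes=8):
    """Return (entry count, table size in bytes) to map memory_bytes exactly once."""
    entries = memory_bytes // page_bytes
    return entries, entries * entry_bytes

TB = 1 << 40
entries_4k, size_4k = page_table_cost(TB, 4 * 1024)
entries_2m, size_2m = page_table_cost(TB, 2 * 1024 * 1024)

print(entries_4k, size_4k // (1 << 30), "GB")   # 268435456 2 GB
print(entries_2m, size_2m // (1 << 20), "MB")   # 524288 4 MB
```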

In a running system, the OS might need more page table entries than the above because several processes have them mapped in different ways. Even the fact that the memory is paged-out has to be represented. And, of course, the disk cache also needs mappings…

That’s a lot of mappings.

When I first learned this my reaction was “What voodoo is this!?! This will be horribly slow!”

With the page mappings being in regular memory, you’re in a bad place. No reasonable system could exist that had to do many reads of normal memory in order to do the address mapping so that it can do one real read or write from that very same memory.

But there is voodoo.

The Translation Lookaside Buffer

The Translation Lookaside Buffer (TLB) is another cache inside the processor. Like the normal memory cache, the TLB is often split into a cache for instruction (code) mappings (the ITLB) and another cache for data mappings (the DTLB). It doesn’t have to be, but that’s pretty common. And like the normal memory cache, getting a lot of TLB misses is painful.

The TLB holds the physical addresses of a bunch of pages that were recently translated. If your code uses the same page many times (common), or other recently used pages, the processor won’t have to consult the page table again. If it does… well, the page table is itself memory and that too can be cached, so maybe it will get the page table entry quickly. Or maybe it won’t. It might need to pay for several reads if the TLB misses. Each read might be something like 50ns, so a bad TLB miss might cost… 150ns? Assuming no paging?

That’s bad.

A modern processor might retire something like (roughly) 1000 instructions in that amount of time. So, yeah, real bad.

What would contribute to having a lot of TLB misses?

- Your process uses a lot of memory (data or code) and hence needs lots of page table entries, more than the TLB can hold.

- Your process uses the memory in random ways rather than some orderly fashion (i.e., your access patterns have bad locality).

- Other processes on the same system are doing the above, ruining things for others (bad actors are why we can’t have nice things).

Note that a TLB might be 1 or 2 kilobytes in size. Enough to hold maybe 256 entries or so. That’s not a lot of entries.
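To see how size and locality interact, here is a toy simulation. It models the TLB as a fully associative, 256-entry LRU cache, which is a simplification I am assuming for illustration (real TLBs are organized differently), and the access patterns are invented.

```python
from collections import OrderedDict
import random

def count_tlb_misses(addresses, tlb_entries=256, page_bits=12):
    """Count misses for an address stream against a toy fully associative LRU TLB."""
    tlb = OrderedDict()          # page number -> True, ordered oldest to newest
    misses = 0
    for addr in addresses:
        page = addr >> page_bits
        if page in tlb:
            tlb.move_to_end(page)            # hit: mark as most recently used
        else:
            misses += 1
            tlb[page] = True
            if len(tlb) > tlb_entries:
                tlb.popitem(last=False)      # evict the least recently used entry
    return misses

ACCESSES = 100_000
WORKING_SET = 64 * 1024 * 1024               # 64MB of data

sequential = [i * 64 for i in range(ACCESSES)]              # orderly walk, 64-byte steps
rng = random.Random(0)                                      # fixed seed for repeatability
scattered = [rng.randrange(WORKING_SET) for _ in range(ACCESSES)]

print("sequential, 4k pages:", count_tlb_misses(sequential))
print("scattered,  4k pages:", count_tlb_misses(scattered))
print("scattered,  2M pages:", count_tlb_misses(scattered, page_bits=21))
```

The orderly walk misses once per page it touches, the scattered accesses miss almost every time, and the same scattered accesses with 2M pages fit in the TLB entirely.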

How Bad Is This Bad You Speak Of

@sasuke___420 provided the figure below from this paper: https://cs.rochester.edu/u/sandhya/papers/ispass19.pdf about ITLB misses specifically. DTLB misses could be better or worse. The workload also matters obviously. But these numbers are interesting:

[Additional information on Superpage in FreeBSD can be found here]

What we can see from the above is that just ITLB misses might cost you as much as 15% (or so) of your execution in an actual workload. Adding DTLB costs, the situation could be much worse indeed. Naturally, it would be easy to make some synthetic workload that was even worse than the above, or much better than the above, but that’s not the point.

Looking at the data again, we can also see that some workloads have very modest costs, even among these “hard-case” TLB programs. In general, it’s easy to come up with cases that have almost no TLB cost — any small program is basically immune to such issues however many cycles it uses. I have an Othello program I’ve been hacking on for instance, it fits easily into the L3 cache of my computer, and it is not getting any significant TLB costs… It’s not a micro-benchmark but it’s too small. You could write such programs all day long.

So what’s normal in this space? Well, I’m not prepared to say. But bigger programs and bigger data are definitely getting more normal. Even phone apps routinely use a lot of memory. A compiler that runs in 4k (yes, that was a thing) is just not a thing… anymore.

Looking at the chart again, we see that another great way to save TLB misses is to need fewer TLB entries by using bigger pages. Hence that Superpage feature.
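One way to put a number on this is "TLB reach": how much memory the TLB can cover without a miss. The sketch below reuses the rough 256-entry figure from earlier and assumes the same entry count for both page sizes, which real processors may not honor.

```python
KB, MB = 1 << 10, 1 << 20

def tlb_reach(entries, page_bytes):
    """Bytes of memory covered when every TLB entry maps a distinct page."""
    return entries * page_bytes

print(tlb_reach(256, 4 * KB) // MB, "MB")   # 1 MB with 4k pages
print(tlb_reach(256, 2 * MB) // MB, "MB")   # 512 MB with 2M pages
```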

Now, armed with this information, let’s talk about the original question. Why are 2M pages not more prevalent, or easier to use?

2M Pages in Various Systems

Let’s harken back to the original discussion when we talked about virtual memory with Segments. A lot of those issues start to kind of apply again:

- 2M pages are more expensive to swap.

- A process creating lots of 2M pages might adversely affect memory for other processes so probably additional authority should be required to do so.

- Physical memory gets fragmented and allocating 2M of contiguous memory for a 2M page might be hard. You might not be able to get the memory promptly when needed (assuming hybrid page sizes).

- To work with 2M pages consistently, you might need to relocate other pages, incurring swapping costs in processes other than the one that is paging (again assuming hybrid page sizes).

- You might get a lot of the benefits of 2M pages by automatically consolidating page table entries whenever consecutive pages are found thereby increasing TLB efficacy.

- One big benefit to bigger pages is fewer page faults on initial use, but an OS could automatically load a consolidated page table entry on a big allocation and pre-load without faults (maybe with a hint) even if the underlying memory was not guaranteed to stay as a 2M page. I happen to like this plan.

- A system with just a few different page sizes is not nearly as bad as general sized segments, but you can see that it is already starting to have the same considerations. Uniform bigger pages (e.g. all 2M) has its own considerations which I will likely write about in another article.
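The contiguity problem in the list above can be sketched with a toy frame map. A 2M page needs 512 free 4k frames in a row, starting on a 2M boundary; the function name and the tiny 8MB "machine" are assumptions for illustration, not how any particular OS does it.

```python
FRAMES_PER_BIG_PAGE = 512   # 2M / 4k

def find_big_page_slot(frame_in_use):
    """Return the first frame index of a free, 2M-aligned run of 512 frames, or None."""
    last_start = len(frame_in_use) - FRAMES_PER_BIG_PAGE
    for start in range(0, last_start + 1, FRAMES_PER_BIG_PAGE):
        if not any(frame_in_use[start:start + FRAMES_PER_BIG_PAGE]):
            return start
    return None

# 8MB of physical memory as 2048 frames; False means free.
frames = [False] * (4 * FRAMES_PER_BIG_PAGE)
for slot in range(3):
    frames[slot * FRAMES_PER_BIG_PAGE + 7] = True    # one stray 4k page in each of 3 slots

print(find_big_page_slot(frames))   # 1536: only the last 2M slot is still clean

frames[3 * FRAMES_PER_BIG_PAGE + 7] = True           # one more stray page
print(find_big_page_slot(frames))   # None, even though 2044 of 2048 frames are free
```

Four scattered 4k pages are enough to block every 2M allocation here, which is why the OS ends up relocating pages (or refusing the request) once memory has been in use for a while.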

Summary and Conclusion

It seems that different operating systems out there are at different stages of evolution on this front. But one thing was clear to me from the Twitter discussion. Allocating a big chunk of memory is getting increasingly normal, and ordinary boring page management leaves a lot of perf on the table right now. Everyone can agree that faster allocations from the operating system are a good thing. And everyone can agree that needing fewer TLB entries is a win for everyone. And it seems to me that doing this for code is even harder than doing it for data. Code locality has been a hard problem even from the days when all you could do was tune segments in your overlay manager.

Many thanks to all those who helped me to see how deep this particular rabbit hole goes. I didn’t know I was taking The Red Pill when I started but I’m glad I did.

Post Script

I just wanted to add that you don’t have to do crazy things to make this better. For instance, if you just used pairs of pages and merged TLB entries in pairs when possible, effectively creating 8k pages as needed, you would cut the number of TLB entries you need in half. Just like loop unrolling, you get half the benefits you will ever see by doing it once. That’s a page size of 2¹³ bytes. You don’t have to go all the way to 2²¹ bytes (2M) to get a lot of benefit. 16k pages would give you 75% of the benefits. And 64k pages would give you 15/16 of the possible benefits. Plainly, you have to merge twice as much to get half of the benefits that are left, which is a raw deal. I’m sure there’s a sweet spot in there.
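Those fractions fall out of a one-line formula: relative to 4k pages, a page that is N times bigger needs 1/N as many entries, so it saves 1 - 1/N of them. The sketch below assumes every page can be merged perfectly, which is the best case.

```python
from fractions import Fraction

BASE_PAGE = 4 * 1024   # 4k baseline

def fraction_of_entries_saved(page_bytes):
    """Best-case share of TLB entries eliminated versus 4k pages."""
    return 1 - Fraction(BASE_PAGE, page_bytes)

for page_kb in (8, 16, 32, 64, 2048):
    print(f"{page_kb}k pages:", fraction_of_entries_saved(page_kb * 1024))
# 8k: 1/2, 16k: 3/4, 32k: 7/8, 64k: 15/16, 2048k (2M): 511/512
```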

Also, one last thing: I have not done nearly enough research to know for sure which of these things are done in which operating system versions. Not even Windows. This is not an area that I know deeply from my normal practice.

There is even more post-script information in the follow-up article.